The Invisible Bird

Showcase

ITP/IMA Winter Show 2018

Tools

After Effects, Arduino, MadMapper, Node.js, p5.js, Projection, Socket.IO, Twitter API, ParallelDots API

Role

Lead Developer, Interaction Design, Fabrication

Brief

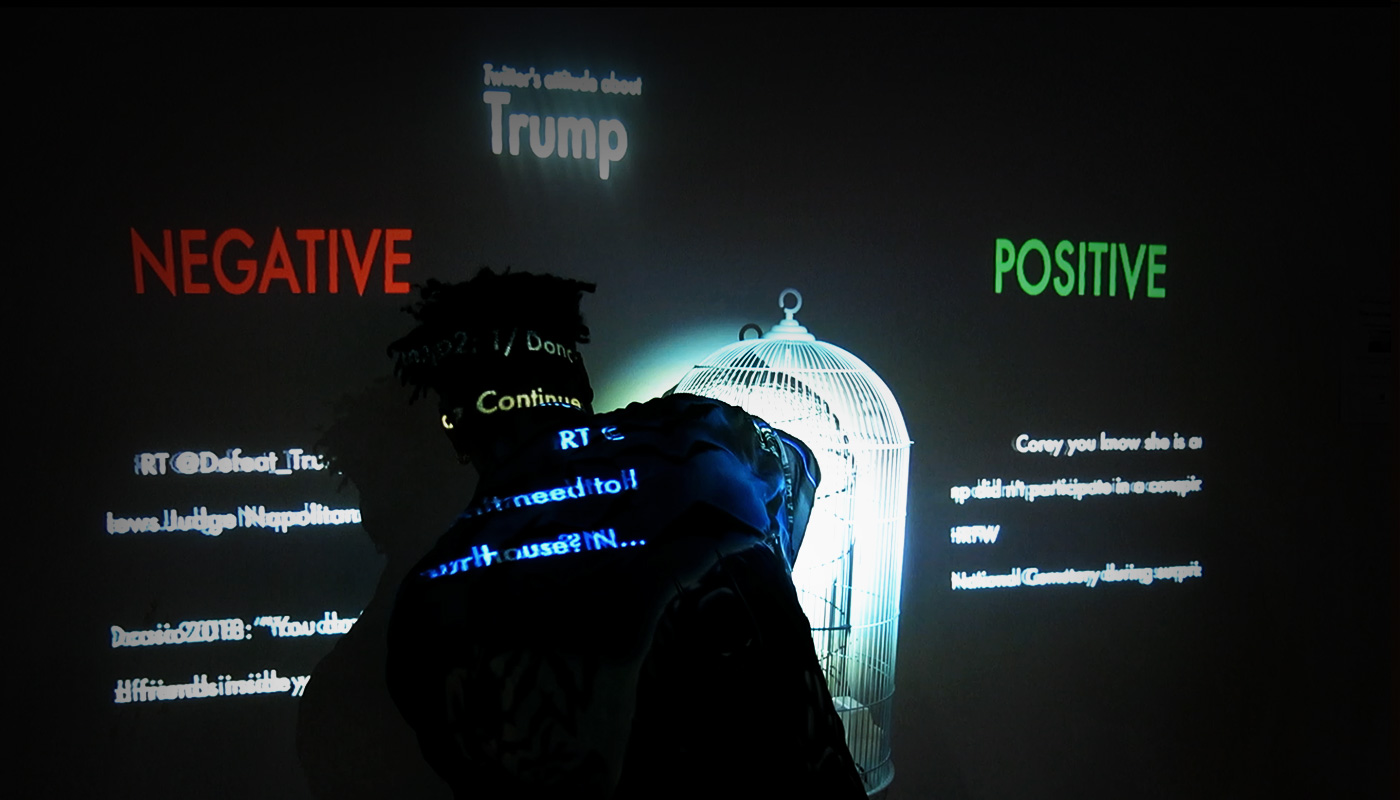

The Invisible Bird is an interactive AI installation that aims to expose the invisible thought cages we’ve built around ourselves. It uses machine-learning-based sentiment analysis to analyze tweets, summarize the public's attitude toward different topics, and let people interact with the results. It is a project I co-created with Tanic Nakpresha at NYU ITP.
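A minimal Python sketch of how per-tweet sentiment scores could be rolled up into a single attitude per topic. It assumes each tweet has already been scored by a sentiment service (such as the sentiment API listed under Tools) into positive, negative, and neutral probabilities. The `ScoredTweet` shape, the function names, and the simple majority rule are hypothetical illustrations, not the project's actual code or the API's real response format.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ScoredTweet:
    """A tweet with sentiment probabilities (hypothetical shape, not a real API response)."""
    topic: str
    text: str
    positive: float
    negative: float
    neutral: float


def tweet_label(t: ScoredTweet) -> str:
    """Return whichever label has the highest probability."""
    scores = {"positive": t.positive, "negative": t.negative, "neutral": t.neutral}
    return max(scores, key=scores.get)


def topic_attitudes(tweets: List[ScoredTweet]) -> Dict[str, str]:
    """Map each topic to 'positive' or 'negative', whichever polar label is more common.

    Neutral tweets are ignored; ties fall back to 'negative' (an arbitrary choice).
    """
    counts: Dict[str, Counter] = {}
    for t in tweets:
        counts.setdefault(t.topic, Counter())[tweet_label(t)] += 1
    return {
        topic: "positive" if c["positive"] > c["negative"] else "negative"
        for topic, c in counts.items()
    }
```

Ignoring neutral tweets keeps the answer binary, which matches the positive-or-negative guess the installation asks visitors to make; a real deployment might also require a minimum number of polar tweets per topic before trusting the majority.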

Concept

The concept of this project was born from a late-night talk between Tanic and me, when we discussed the impact of social media on our lives. Nowadays, people spend hours a day on social media, whether or not they notice how insidiously their opinions and decision-making are being shaped.

In response, we thought it would be interesting to build a mechanism that reminds people of the opinion bubbles they live in by letting them physically interact with one of the social media behemoths. We chose Twitter as our target platform, as summarized in the following video:

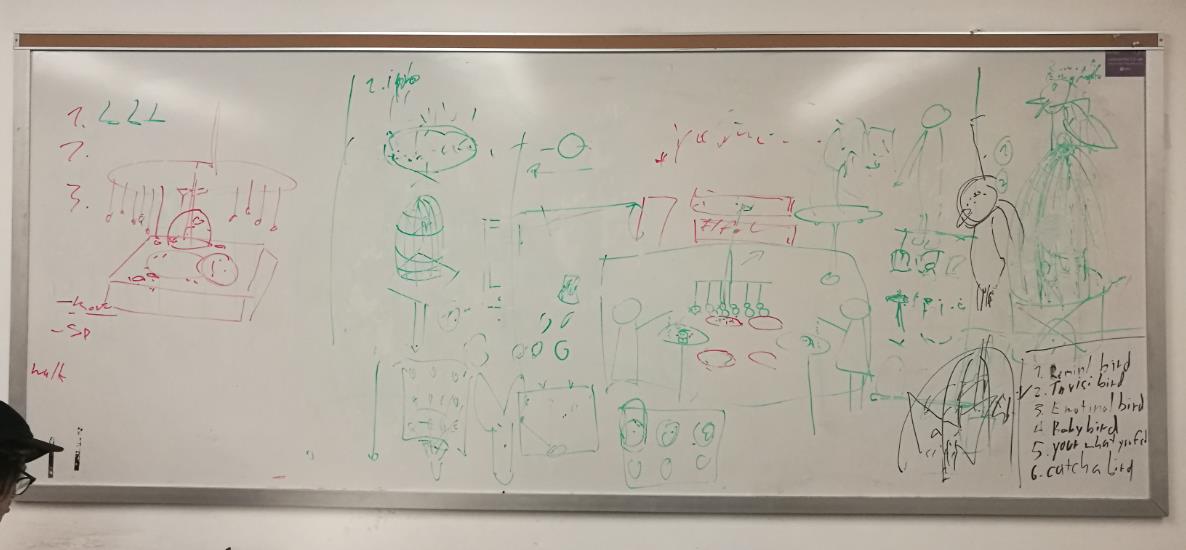

Ideation

Once we’d decided to make an installation piece that lets people interact with data from Twitter and reflect on it, we began to ideate possible interactions. Our goal was to engage users in a way that:

- connects them to the source of the data they’re playing with, which is Twitter;

- is aesthetically pleasing and aligns with the Twitter bird metaphor;

- has multiple layers to encourage repeated rounds of interaction, yet stays as simple and intuitive as possible to avoid confusion;

- is technically feasible within the one-month timeframe.

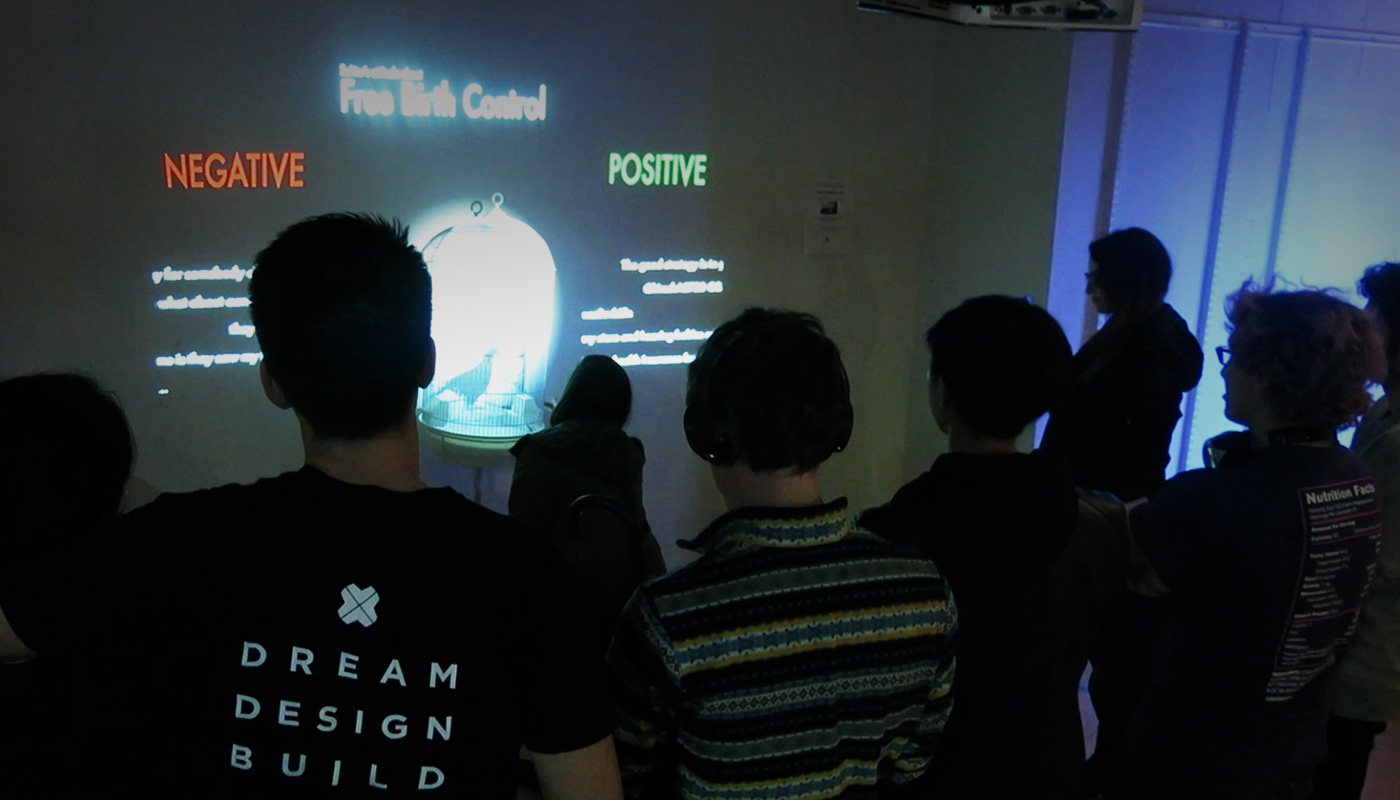

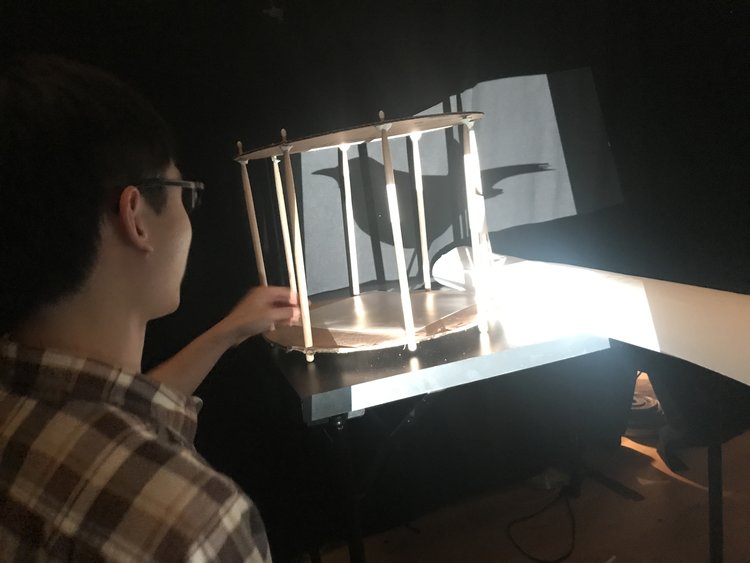

After several brainstorming sessions, we landed on a shadow-play idea that lets users interact with an invisible Twitter bird in a bird cage. The invisible bird represents a topic or a hashtag on Twitter, and the user has to guess whether the public’s attitude toward that topic is mostly positive or negative. The bird then reacts to the user’s input, prompting the user to reflect on the topic in light of whether her/his guess was right.

Prototype

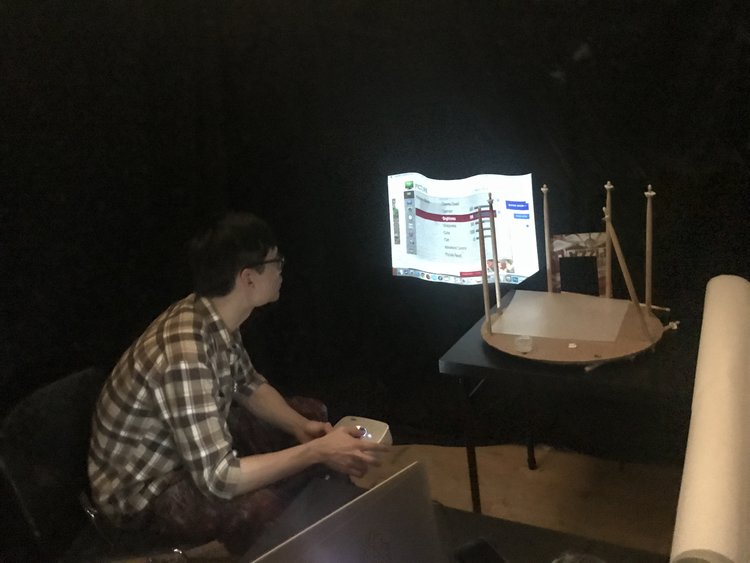

After the ideation, we designed and built our first prototype to test the concept. The goals of prototyping were to find out:

- the optimal size of the physical interface - the bird cage;

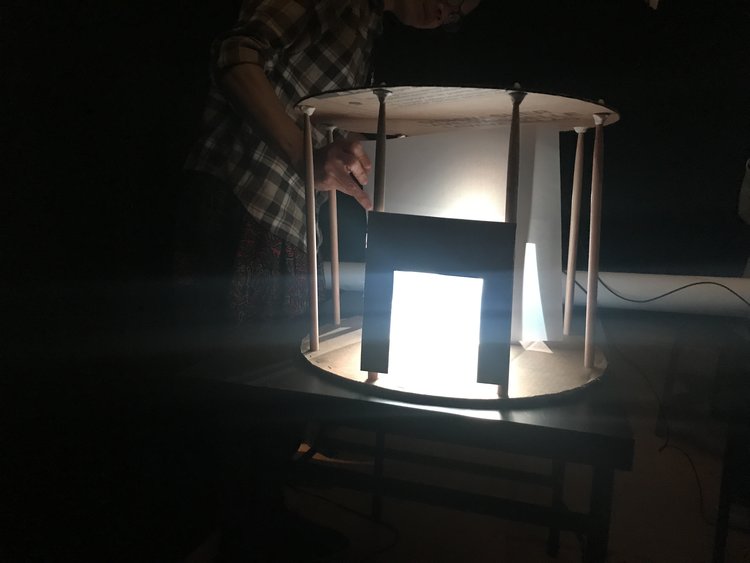

- the best way to create the illusion of an invisible bird using shadow (e.g. projection vs. an actual shadow of bird-shape objects);

- the requirements of environment for this piece, provided with different ways of shadow creation;

- interactions that are both intuitive under the “bird context” and functional as user inputs to make a guess;

- information that should be provided to facilitate guess making;

- the overall interaction flow

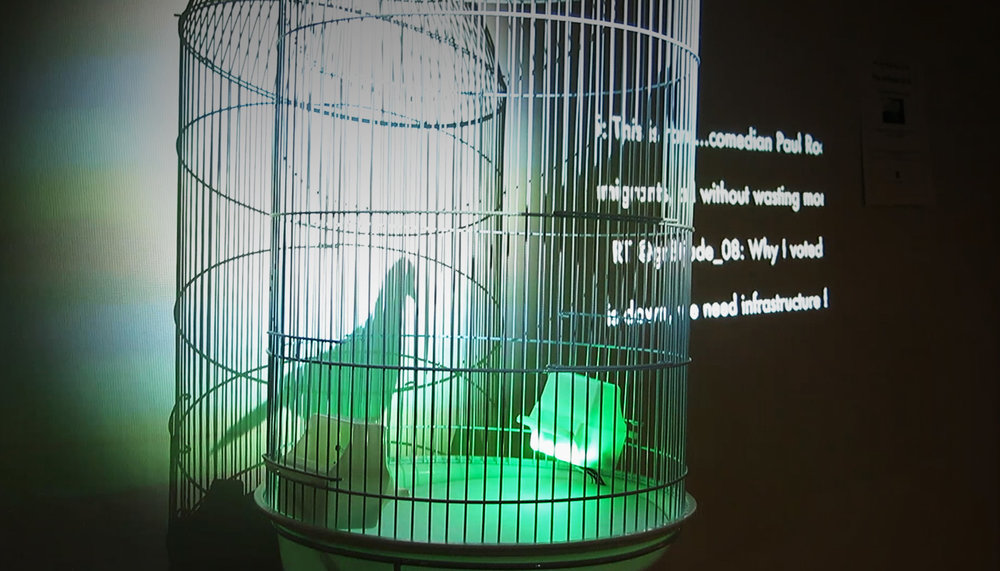

We built a table-sized bird cage out of cardboard and tried out different ways to create the bird shadow. While using projectors to cast the bird shadow appeared to be the most effective and feasible option, the position of the light source (e.g. front vs. rear projection) and the position and pose of the user (e.g. at the front or at the sides, standing vs. sitting) produced different effects and enabled or ruled out different user actions and physical setups.

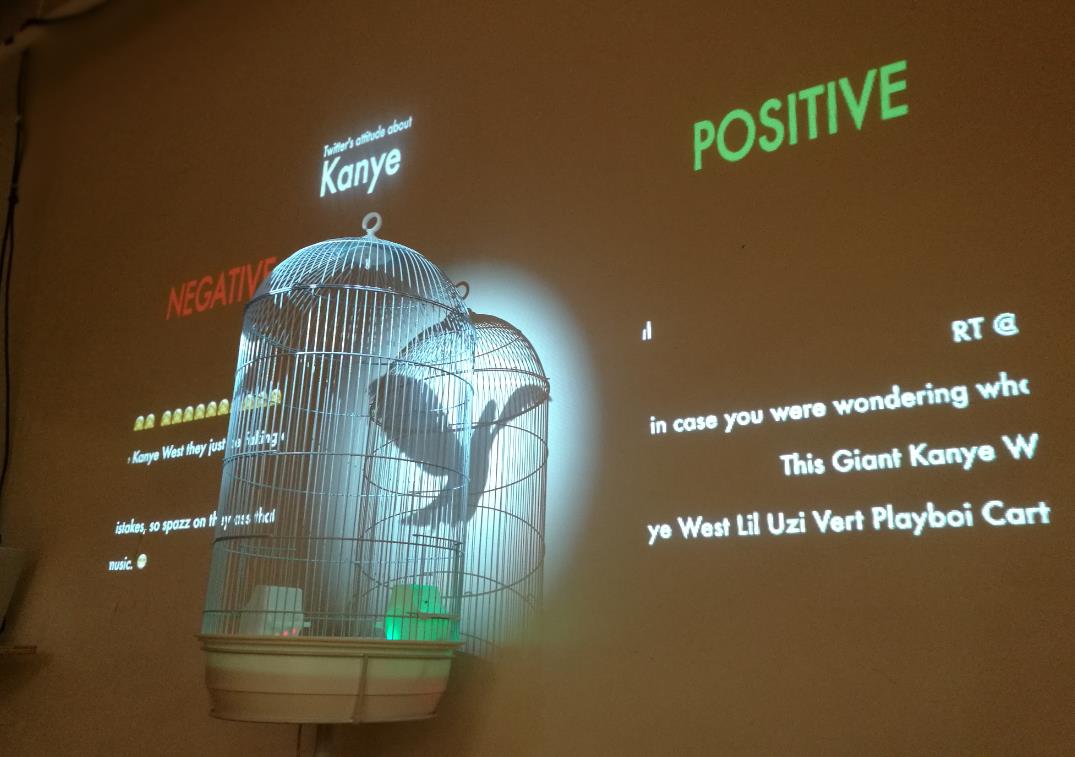

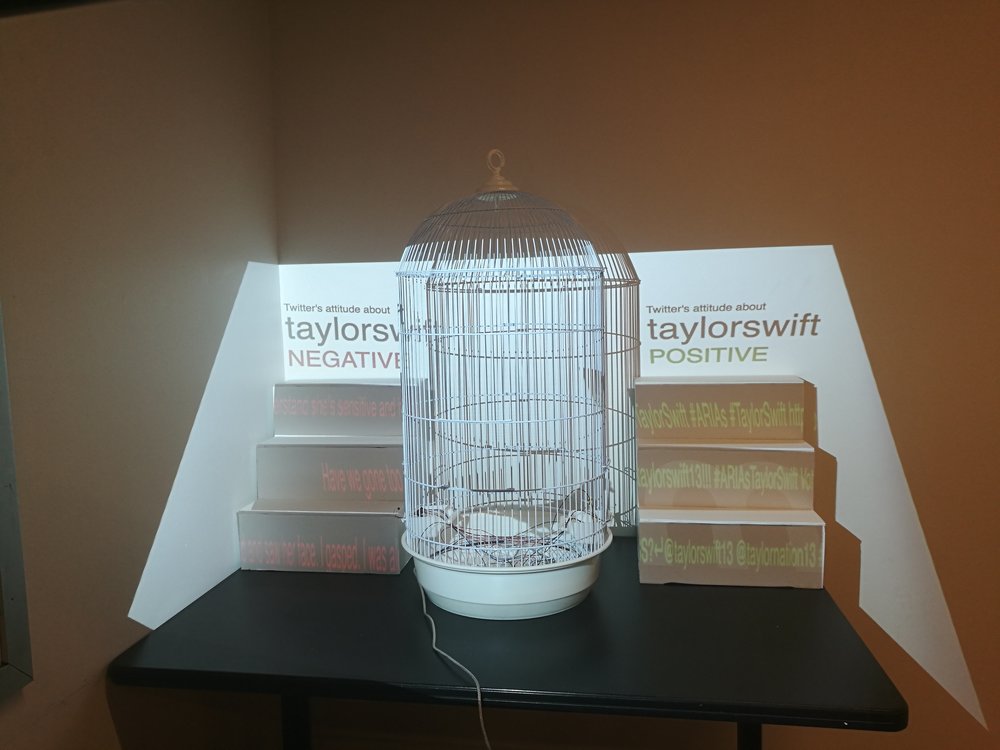

After some attempts and adjustments, we weighed the pros and cons of the options and decided to use front projection to cast the bird shadow and the topic text onto a backdrop behind the bird cage. We also placed two paper stairs on either side of the cage, onto which we projected green positive tweets and red negative tweets about the chosen topic, giving the user some reference if she/he is not familiar with that topic.

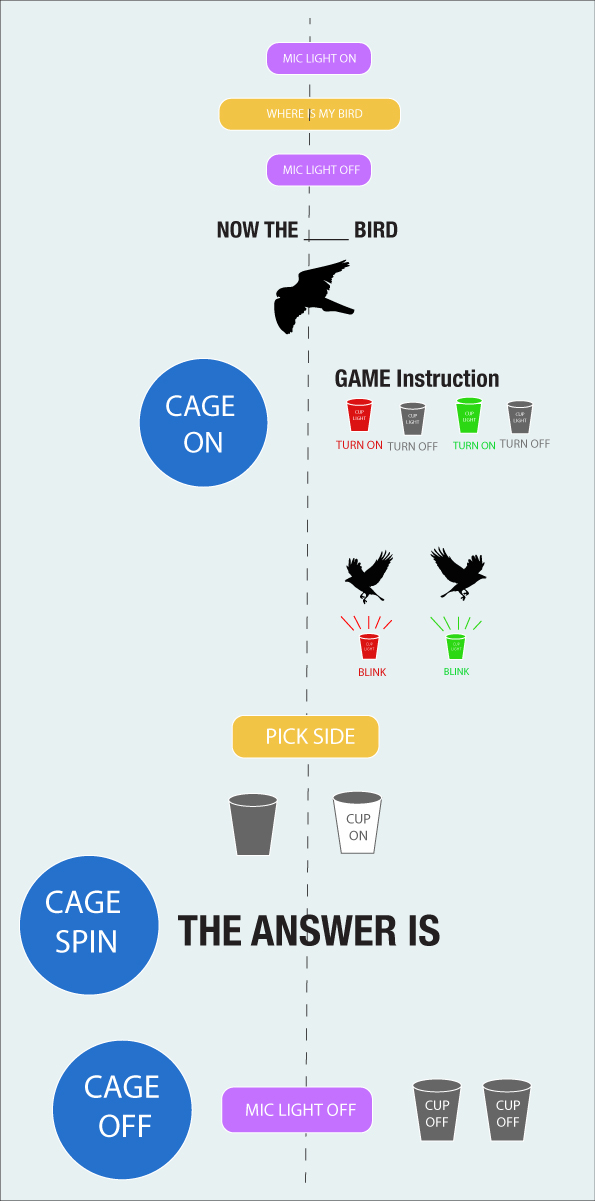

1. To trigger the interaction, the user speaks the sentence “Where’s my bird?” into a microphone to summon an invisible Twitter bird representing a specific topic into the bird cage.

2. Then, the user hovers her/his hand over one of the bird food containers on either side of the cage to make a positive or a negative guess.

3. Finally, the user watches how the bird shadow reacts (correct: the bird eats the food; wrong: the bird poops and flies away) to find out whether her/his guess was correct.
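The three steps above behave like a small state machine. The Python sketch below models that flow under our own assumptions: the class, state, and reaction names are hypothetical, choosing which topic to summon is left to the caller, and the correct answer is looked up in a hypothetical map from topic to "positive" or "negative".

```python
from enum import Enum, auto
from typing import Dict, Optional


class State(Enum):
    IDLE = auto()            # waiting for someone to say "Where's my bird?"
    AWAITING_GUESS = auto()  # a bird (topic) is in the cage, waiting for food
    REACTING = auto()        # the bird shadow is playing its reaction


class BirdCage:
    """Hypothetical controller for the summon -> guess -> react flow."""

    def __init__(self, attitudes: Dict[str, str]):
        self.attitudes = attitudes  # topic -> "positive" or "negative"
        self.state = State.IDLE
        self.topic: Optional[str] = None

    def summon(self, topic: str) -> bool:
        """Step 1: the trigger phrase was heard; put a bird for `topic` in the cage."""
        if self.state is not State.IDLE or topic not in self.attitudes:
            return False
        self.topic = topic
        self.state = State.AWAITING_GUESS
        return True

    def guess(self, label: str) -> Optional[str]:
        """Step 2: food landed in a container; return the reaction to play (step 3)."""
        if self.state is not State.AWAITING_GUESS:
            return None  # ignore food dropped when no bird is waiting
        self.state = State.REACTING
        correct = self.attitudes[self.topic] == label
        return "eat" if correct else "poop_and_fly_away"

    def reaction_finished(self) -> None:
        """The reaction has ended; the cage is empty again."""
        self.state = State.IDLE
        self.topic = None
```

Keeping an explicit state means stray inputs, such as food dropped while no bird is present or a second "Where's my bird?" mid-round, are simply ignored instead of interrupting the current round.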

The accompanying figure shows how the bird cage reacts to user inputs.

User Testing

We ran several rounds of user testing to collect feedback, which focused mostly on the following aspects:

Problem: The audio interface was at the front (we placed the microphone in front), while the tangible interface was at the back (the cage and the projection backdrop were behind the microphone). This split the initial voice input from the subsequent interactions and made users put in extra effort to move on to the next step of the interaction flow.

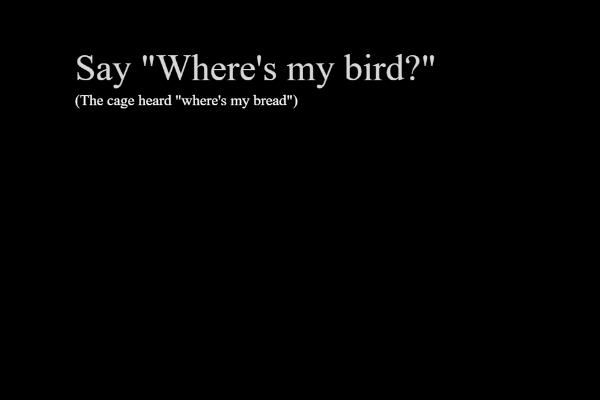

Solution: To make the users' shift of attention smoother, we pushed the cage backwards (closer to the projection plane) and built the microphone into the cage. This way, all the interfaces are in the same place, and users stay focused on interacting with the cage. We also displayed the speech recognition result to users ("The cage heard XXX") so that they got immediate feedback on their voice input and did not lose patience.
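Showing the recognized text and matching the trigger phrase could look like the Python sketch below. It assumes the speech recognizer hands back a plain transcript string; the accepted phrase variants and the function names are hypothetical, and the variants exist because a recognizer may render the contraction in more than one way.

```python
import re

# Hypothetical set of transcripts accepted as the trigger phrase.
TRIGGER_VARIANTS = ("where's my bird", "where is my bird", "wheres my bird")


def normalize(transcript: str) -> str:
    """Lowercase, straighten curly apostrophes, drop other punctuation, squeeze spaces."""
    text = transcript.lower().replace("\u2019", "'")
    text = re.sub(r"[^a-z0-9' ]+", " ", text)
    return " ".join(text.split())


def feedback_text(transcript: str) -> str:
    """Caption shown to the user so they know what the cage heard."""
    return f"The cage heard {transcript.strip()}"


def is_trigger(transcript: str) -> bool:
    """True if any accepted variant appears anywhere in the normalized transcript."""
    norm = normalize(transcript)
    return any(variant in norm for variant in TRIGGER_VARIANTS)
```

Displaying the raw transcript, rather than only reacting to a match, lets a visitor see immediately why a misheard phrase did not summon the bird.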

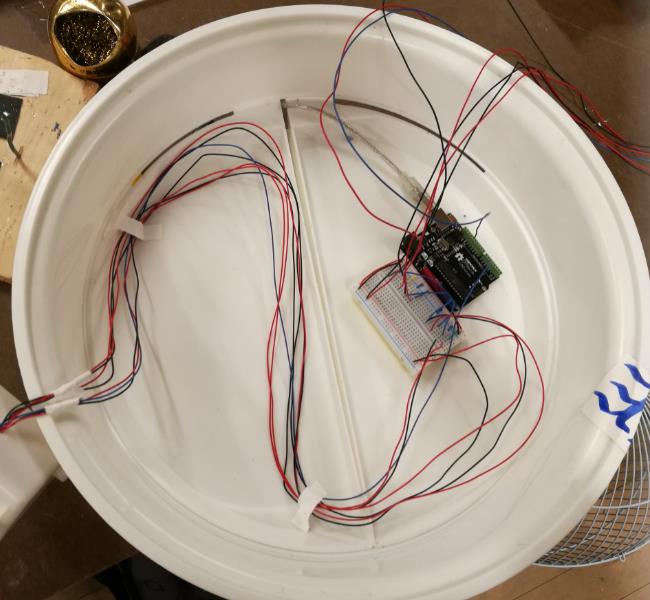

Problem: We used light sensors to detect which food container the user hovered her/his hand over. Because each light sensor was small compared to the food container, its reading sometimes did not change enough to capture the user's guessing intention.

Solution: We doubled the number of light sensors at each container and rearranged their layout. This way, no matter how the user’s hand approaches the container, at least one of the sensors picks up the movement and triggers a guess. Also, instead of hovering a hand over the container, we asked users to actually feed the bird by throwing an almond into one of the food containers. This not only made users feel more like they were interacting with a real bird; the feeding action also made intentions easier to capture, since it kept the user’s hand near the sensors for longer.
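One way to express "at least one of the sensors picks up the movement" is to treat a container as fed when any of its sensors drops well below its own resting level for a few consecutive samples. The Python sketch below assumes that readings fall when a sensor is shaded (this depends on how each photoresistor is wired) and that the green container stands for a positive guess and the red one for a negative guess. The class and function names, the 70% threshold, and the three-sample hold are hypothetical values to tune on site; the same logic would translate directly to an Arduino sketch.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ContainerDetector:
    """Detects a feed at one food container from several light sensors."""
    baselines: List[float]   # resting reading of each sensor, e.g. averaged at startup
    drop_ratio: float = 0.7  # a sensor counts as shaded below 70% of its baseline
    hold_samples: int = 3    # consecutive shaded samples needed before firing
    _streak: int = field(default=0, init=False)

    def update(self, readings: List[float]) -> bool:
        """Take one sample (one reading per sensor); return True once per shading episode."""
        shaded = any(r < b * self.drop_ratio for r, b in zip(readings, self.baselines))
        self._streak = self._streak + 1 if shaded else 0
        return self._streak == self.hold_samples


def classify_sample(green: ContainerDetector, red: ContainerDetector,
                    green_readings: List[float], red_readings: List[float]) -> Optional[str]:
    """Map a detected feed to a guess; both detectors are updated on every sample."""
    fed_green = green.update(green_readings)
    fed_red = red.update(red_readings)
    if fed_green and not fed_red:
        return "positive"
    if fed_red and not fed_green:
        return "negative"
    return None  # nothing detected, or both at once (ambiguous)
```

Requiring a short streak filters out single noisy readings, while the longer dwell time of the almond-throwing gesture makes it easy for a real feed to satisfy it.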

Problem: Because the tweets were projected in green and red onto the stairs on both sides of the cage (which look rectangular), people confused them with the “green & red boxes” mentioned in the voice instructions during the guessing step.

Solution: We removed the green and red from the projected tweets and kept those colors only for the cage's food containers. We also changed the voice instructions to ask users to “feed in the green/red food container”.

ITP/IMA Winter Show 2018

Our piece was selected for the ITP/IMA Winter Show 2018, and we were honored to have over 300 visitors interact with our invisible bird over the two days. Reactions varied: some visitors were amazed by the visuals, some appreciated the humor of the bird’s reactions, and some were upset by our choice of some controversial topics. Overall, we believe we succeeded in getting people to think outside their thought cages.